使用BigQuery的第一步,原則上就是開始把資料丟上BigQuery... 在Google Cloud Platform中有許多產品已經支援直接upload到BigQuery中,可以參考官方網站的說明... 這邊介紹的是如何將現成的JSON data匯入到BigQuery中(因為CSV相對簡單許多,這邊不贅述CSV的載入),使用到的工具是gsutil,建議可使用Cloud Platform整合版本的(這邊可以下載)

BigQuery原則上吃csv與json兩種格式,其中json格式與標準的json有些許不同,他強調的是NEWLINE_DELIMITED_JSON,也就是說,每筆row之間是使用斷行隔開,而且每筆row就是獨立的一筆json資料欄... 但有別於csv,json格式的檔案是允許內部再有子階層的資料型態,以本範例為例,items為新的一個json array,可想像成RDBMS中的detail table資料...

基本的bq操作指令如上面所示,因為預設是load CSV,所以在load json時候需要多加上"--source_format NEWLINE_DELIMITED_JSON"的提示,然後依序再帶入json data file與schema file讓bq可以載入檔案。

JSON Data Schema Definition

在修改items欄位的定義之後,正確載入json的格式如下:

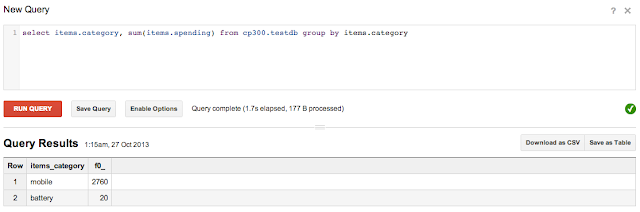

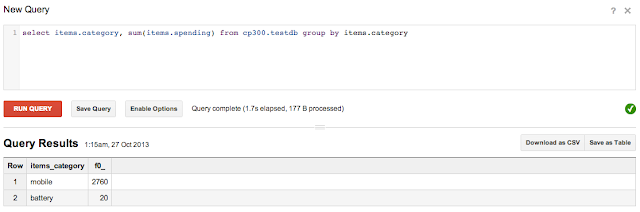

而正確的載入資料之後,就可以使用BigQuery的Portal來做資料的查詢,而階層式的資料查詢在BigQuery中會自動的做flatten的動作,因此呈現的資料表仍然是1個column,但是資料欄位名稱會以"."的方式將主欄位與階層欄位呈現出來,如下所示:

Sample Schema (1 record only)

{"transaction_time": "2013-10-20T14:32:22","transaction_id": 50124,"total_spending": 300,"coupon_discount": 20,"items": [{"name": "xt920","category": "mobile","quantity": 10,"spending": 320},{"name": "xt920b","category": "battery","quantity": 11,"spending": 20},{"name": "xt921","category": "mobile","quantity": 10,"spending": 200}]}

上面資料是經過排版的結果,實際資料長相如下:

{"transaction_time":"2013-10-20T14:32:22","transaction_id":50124,"total_spending":300,"coupon_discount":20,"items":[{"name":"xt920","category":"mobile","quantity":10,"spending":320},{"name":"xt920b","category":"battery","quantity":11,"spending":20},{"name":"xt921","category":"mobile","quantity":10,"spending":200}]}...(next data)

bq指令 - for JSON data

bq load --source_format NEWLINE_DELIMITED_JSON [target dataset].[target table] [json data] [schema data]

不論是CSV或是JSON都需要針對傳入的資料做欄位定義的動作,欄位的定義會方便BigQuery判斷查詢與製作索引,以JSON資料而言,定義檔也是json format,但是是json array,array中帶入每筆欄位的name(名稱), mode(是否可以為空、是否為集合型態等), type等資訊,其中mode為集合型態的話,則會多個欄位叫field的來收容階層資料資訊...

而載入資料若錯誤,系統會提示錯誤的內容,可再依照錯誤內容做修改... 以上面Sample Data為例,資料具有階層式的特性,下面先展示兩個錯誤的schema定義,在載入時候的錯誤提示:

Error1: Load File with “non-repeated field” error

$ cat schema.json

[

{"name":"transaction_time","mode":"nullable","type":"string"},

{"name":"transaction_id","mode":"required","type":"integer"},

{"name":"total_spending","mode":"required","type":"integer"},

{"name":"coupon_discount","mode":"required","type":"integer"},

{"name":"items","mode":"required","type":"record", "fields": [

{"name":"name","mode":"required","type":"string"},

{"name":"category","mode":"required","type":"string"},

{"name":"quantity","mode":"required","type":"integer"},

{"name":"spending","mode":"required","type":"integer"},

{"name":"descript","mode":"required","type":"string"}

]}

]

$ bq load --source_format NEWLINE_DELIMITED_JSON cp300.testdb ./sample.json ./schema.json

Waiting on bqjob_r3d39c5449048c912_00000141df277e2c_1 ... (25s) Current status: DONE

BigQuery error in load operation: Error processing job 'mitac-cp300:bqjob_r3d39c5449048c912_00000141df277e2c_1': Too many errors encountered. Limit is: 0.

Failure details:

- array specified for non-repeated field

Error2: Load File with “missing required field(s)” error

$ cat schema.json

[

{"name":"transaction_time","mode":"nullable","type":"string"},

{"name":"transaction_id","mode":"required","type":"integer"},

{"name":"total_spending","mode":"required","type":"integer"},

{"name":"coupon_discount","mode":"required","type":"integer"},

{"name":"items","mode":"repeated","type":"record", "fields": [

{"name":"name","mode":"required","type":"string"},

{"name":"category","mode":"required","type":"string"},

{"name":"quantity","mode":"required","type":"integer"},

{"name":"spending","mode":"required","type":"integer"},

{"name":"descript","mode":"required","type":"string"}

]}

]

$ bq load --source_format NEWLINE_DELIMITED_JSON cp300.testdb ./sample.json ./schema.json

Waiting on bqjob_r128c022a9d7e9a45_00000141df299024_1 ... (16s) Current status: DONE

BigQuery error in load operation: Error processing job 'mitac-cp300:bqjob_r128c022a9d7e9a45_00000141df299024_1': Too many errors encountered. Limit is: 0.

Failure details:

- missing required field(s)

Finish Load JSON Data

$ cat schema.json

[

{"name":"transaction_time","mode":"nullable","type":"string"},

{"name":"transaction_id","mode":"required","type":"integer"},

{"name":"total_spending","mode":"required","type":"integer"},

{"name":"coupon_discount","mode":"required","type":"integer"},

{"name":"items","mode":"repeated","type":"record", "fields": [

{"name":"name","mode":"required","type":"string"},

{"name":"category","mode":"required","type":"string"},

{"name":"quantity","mode":"required","type":"integer"},

{"name":"spending","mode":"required","type":"integer"},

{"name":"descript","mode":"nullable","type":"string"}

]}

]

$ bq load --source_format NEWLINE_DELIMITED_JSON cp300.testdb ./sample.json ./schema.json

Waiting on bqjob_r2110b4bab734de17_00000141df2a4271_1 ... (69s) Current status: DONE

查詢的當下,也可以此方式指定條件或group by等等的方式。

Reference

- Sample json data: https://developers.google.com/bigquery/docs/personsData.json

- Sample json schema: https://developers.google.com/bigquery/docs/personsDataSchema.json

留言

張貼留言